What happens when a government attempts to shut down one of the most popular social media platforms to combat foreign propaganda?

In my recently accepted article for The Journal of Politics, I examine the effect of the Ukrainian ban on Russian social media to draw lessons on the use of censorship against information threats in times of war. I argue that such bans may work under the right conditions – not because people cannot access forbidden websites, but because it is too troublesome to do so.

The question matters because state and non-state actors worldwide are increasingly turning to different degrees of online censorship to combat foreign influence campaigns, disinformation, propaganda, and other forms of harmful information. Examples abound.

In 2017, Germany passed a law against hate speech and other harmful information that requires internet companies to remove certain kinds of offensive content. The following year, France passed a law to regulate election-related ‘fake news’. The United States (US) is no exception: in 2020, the Trump administration threatened to ban the Chinese-owned TikTok, citing national security concerns. In 2021, Parler, an American microblogging and social networking service, was temporarily shut down after Amazon and other tech giants withdrew their services from the app. This happened days after the platform was used by right-wing radicals to coordinate the attack on the United States Capitol.

Ukraine offers one of the most extreme examples of this trend among liberal democracies. In 2017, the Ukrainian government forced internet service providers to block access to VKontakte, the Russian equivalent of Facebook, alongside other websites.

The long-term goal behind the ban is to curb Russian propaganda and surveillance of Ukrainian citizens during the ongoing war with Russian-backed separatists in the country. In terms of scale, the ban is roughly equivalent to blocking Facebook in the UK or the US. Nevertheless, the censorship policy is still considered “soft”, because users can legally bypass the ban by using a VPN (virtual private network).

Because the exact timing of the ban in 2017 was largely unexpected, it was “exogenous” from the point of view of ordinary users. The ban therefore enables a “natural experiment” with a relatively clear distinction between the period before and after the “treatment” (i.e., the censorship policy). I measure activity as the number of wall posts uploaded by individual users on VKontakte.

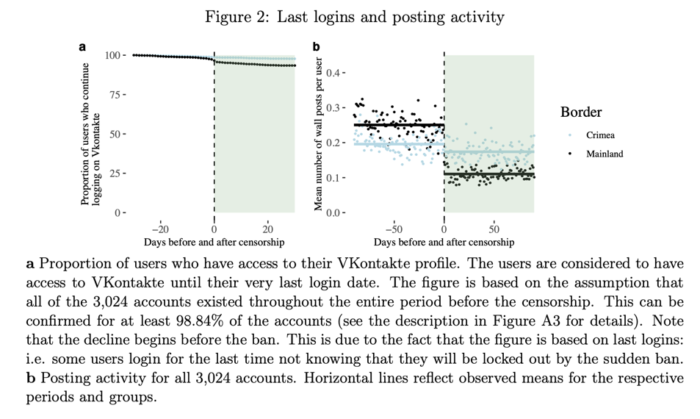

As an additional control, I compare users in Crimea with those living up to 50 km north of the border between Crimea and “Mainland” Ukraine. The ban was implemented only in Mainland Ukraine, and prior to the ban, online activity on both sides of the border followed a similar trend. Crimea is therefore used to approximate a counterfactual scenario: what online behavior in Mainland Ukraine would have looked like if the ban had not taken place.
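To make the logic of this comparison concrete, here is a minimal Python sketch of a simple two-group, two-period difference-in-differences calculation. It is an illustration under stated assumptions, not the estimation code used in the article: the `Observation` record, the `did_estimate` function and all the numbers are hypothetical. The idea is that the change in activity in Crimea (the untreated group) stands in for the change Mainland users would have experienced without the ban, so subtracting it isolates the effect attributable to the ban, provided the two regions really did follow parallel trends.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Observation:
    user_id: str
    region: str   # "mainland" (treated) or "crimea" (control); hypothetical labels
    period: str   # "before" or "after" the ban
    posts: float  # wall posts in that period (hypothetical toy values)


def did_estimate(obs):
    """2x2 difference-in-differences computed from the four group means."""
    def group_mean(region, period):
        vals = [o.posts for o in obs if o.region == region and o.period == period]
        if not vals:
            raise ValueError(f"no observations for {region}/{period}")
        return mean(vals)

    # Change over time in the censored region
    treated_change = group_mean("mainland", "after") - group_mean("mainland", "before")
    # Change over time in the uncensored region (the counterfactual trend)
    control_change = group_mean("crimea", "after") - group_mean("crimea", "before")
    return treated_change - control_change


# Hypothetical toy data: two users per region, observed before and after the ban
data = [
    Observation("u1", "mainland", "before", 10),
    Observation("u1", "mainland", "after", 4),
    Observation("u2", "mainland", "before", 8),
    Observation("u2", "mainland", "after", 2),
    Observation("u3", "crimea", "before", 9),
    Observation("u3", "crimea", "after", 8),
    Observation("u4", "crimea", "before", 7),
    Observation("u4", "crimea", "after", 6),
]

print(did_estimate(data))  # -5
```

In this toy example, Mainland activity falls by 6 posts on average while Crimean activity falls by 1, so the sketch attributes a drop of 5 posts to the ban. A real analysis would typically use a regression with user and time fixed effects and proper standard errors rather than raw group means.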

I find that at least 90% of users in the censored areas (in Mainland Ukraine) found a way to circumvent the ban and continued logging on to VKontakte at least one month after the ban (Figure 2a in the original manuscript), most likely through a VPN. However, even though these users knew how to bypass the ban, both legally and technically, the censorship policy still greatly reduced their daily activity on VKontakte in censored Mainland Ukraine compared with Crimea, where the ban was not imposed (Figure 2b).

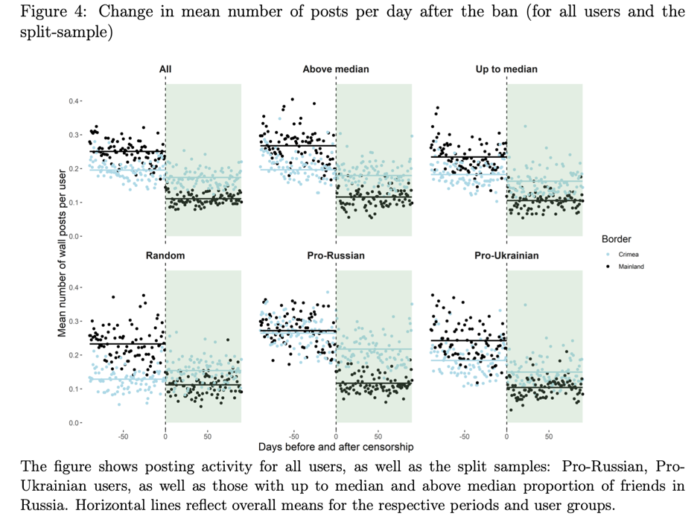
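The circumvention measure can be illustrated with a similarly minimal Python sketch: the share of censored users whose most recent login falls at least a month after the ban. This is a hypothetical illustration, not the article's procedure; the `share_still_active` function, the 30-day threshold parameter, the placeholder `BAN_DATE` and the login dates are all assumptions made for the example.

```python
from datetime import date, timedelta


def share_still_active(last_seen, ban_date, min_days=30):
    """Fraction of users whose last login is at least `min_days` after `ban_date`."""
    if not last_seen:
        raise ValueError("no users to evaluate")
    threshold = ban_date + timedelta(days=min_days)
    still_active = sum(1 for seen in last_seen.values() if seen >= threshold)
    return still_active / len(last_seen)


# Placeholder ban date and hypothetical last-login dates for four censored users
BAN_DATE = date(2017, 5, 16)
last_seen = {
    "u1": date(2017, 7, 1),
    "u2": date(2017, 6, 20),
    "u3": date(2017, 5, 20),  # stopped logging on shortly after the ban
    "u4": date(2017, 8, 2),
}

print(share_still_active(last_seen, BAN_DATE))  # 0.75
```

Note that a high share on this measure is fully compatible with a large drop in activity: a user who logs on once a month still counts as "still active" here, which is why the article looks separately at the volume of daily posting.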

Most importantly, I find that the effect of the “patriotic” and “anti-Russian” ban on VKontakte activity is as great among pro-Russian users as among pro-Ukrainian users (Figure 4 in the manuscript). Indeed, the Ukrainian ban succeeded precisely because pro-Russian users did not resist the censorship policy more than other users, at least when it comes to their actual online behavior.

This finding is surprising in the heavily politicized context of the war in Ukraine. Essentially, this suggests that the way users respond to censorship has little to do with their political attitudes towards the state. Instead, the online behavior appears to be mainly driven by how difficult and time-consuming it is to access a forbidden website. In this case, the Ukrainian state simply slowed down the login process by a few seconds (i.e., by forcing users to install and use a VPN).

This pragmatic or “apolitical” pattern has also been observed by researchers studying China. The findings are in line with the “cute cat theory”, which suggests that our online behavior is driven more by our search for easily accessible entertainment than by politics. This has been one of the most important and thought-provoking lessons for me while writing the research article as part of my PhD dissertation in political science.

Despite what often seems like a constant quest to find politics everywhere and in everything, maybe politics is not always as much of a driving force as we tend to make it out to be. This does not mean that the consequences of relatively apolitical behavior are not political; on the contrary.

The Ukrainian example illustrates how easily one of the most popular social media platforms can be disrupted under the right conditions, even in a more democratic context. This raises the difficult question of whether the use of censorship against hostile media in times of war can be normatively justified. This is, of course, a question I cannot do justice to in a brief blog post.

One thing, however, does seem relatively clear. The potential vulnerability of social media may have severe consequences for internet freedom around the world. For example, what if the Russian government attempted to block Facebook, as it has previously threatened to do? These results suggest that we might witness a similar outcome.

Against that backdrop, it is important to stress that online bans do not necessarily lead to “success”. In the research article, I theorize that such interventions may disrupt online activity if they meet at least three criteria: 1) the ban actually slows down the connection to the forbidden website, 2) the users have the option to replace the forbidden source with a similar non-banned alternative, and 3) the banned service is already well known to the general public.

What researchers and the public really need is more empirical research that tests existing theories about how and why censorship works. This is an important step toward truly mapping out the vulnerabilities of our global social media infrastructure, an infrastructure that constitutes a crucial part of our lives in today’s tech-based societies.

This blog piece is based on the article “Fighting Propaganda with Censorship: A Study of the Ukrainian Ban on Russian Social Media” by Yevgeniy Golovchenko, forthcoming in the Journal of Politics, April 2022. The empirical analysis of this article has been successfully replicated by the Journal of Politics and replication materials are available at The Journal of Politics Dataverse.

All of the figures originate from the forthcoming research article in The Journal of Politics. The article is part of the Digital Disinformation and DIPLOFACE projects, which are funded by the Carlsberg Foundation and the European Research Council respectively.

About the author

Yevgeniy Golovchenko, University of Copenhagen

Yevgeniy Golovchenko is a postdoc at the Department of Political Science at the University of Copenhagen and affiliated with the Copenhagen Center for Social Data Science. His research interests include censorship, digital diplomacy, propaganda, and disinformation in the context of international conflict. You can find further information about his research on his website and follow him on Twitter @Golovchenko_Yev.